Introduction

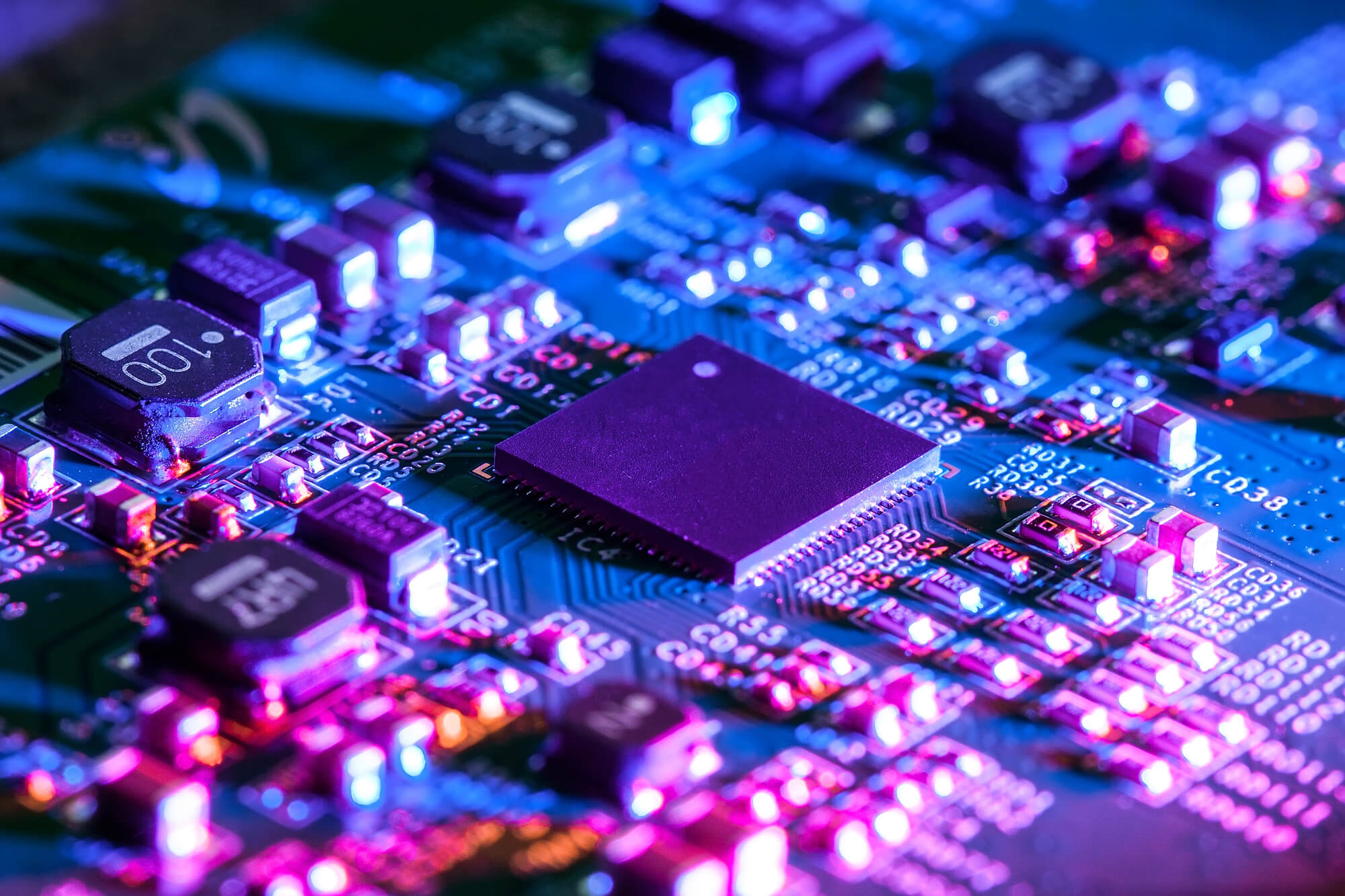

The invention and evolution of integrated circuits (ICs) rank among the most significant technological advancements in modern history. Integrated circuits have grown from a groundbreaking invention into the industry standard, powering everything from household appliances to space technology. This article explores the origins, development, and future of ICs, showing how these tiny devices revolutionized electronics and enabled the digital age.

The Invention of Integrated Circuits

In the late 1950s, as electronics technology advanced, scientists and engineers faced a challenge known as the “tyranny of numbers.” As devices grew more complex, they required more transistors, resistors, capacitors, and other components, which led to larger, more cumbersome designs and an increased risk of component failure.

Two key figures, Jack Kilby of Texas Instruments and Robert Noyce of Fairchild Semiconductor, independently invented the first integrated circuits in 1958 and 1959, respectively. Kilby built his circuit on a single piece of germanium, integrating transistors and resistors, while Noyce’s design used silicon, which allowed for easier production and scalability. Noyce’s silicon-based IC became the foundation of modern IC manufacturing.

The 1960s: The Early Growth of IC Technology

The 1960s saw rapid progress in IC technology. The U.S. Department of Defense recognized the potential of ICs and began funding projects that would help scale the technology. Early ICs, known as Small-Scale Integration (SSI) circuits, contained only a few dozen transistors and were primarily used in military applications, including missiles and early computers.

During this period, companies like Fairchild Semiconductor and Texas Instruments began producing ICs commercially. As demand grew, the industry moved toward Medium-Scale Integration (MSI), allowing ICs to hold hundreds of transistors. This era also marked the beginning of the semiconductor industry’s close connection with computers, as ICs began to replace discrete transistor circuits in mainframes and minicomputers.

The 1970s: The Rise of Microprocessors

The 1970s were marked by the emergence of microprocessors, which integrated the functionality of an entire computer processor onto a single chip. Intel’s 4004, released in 1971, was the first commercially available microprocessor, featuring 2,300 transistors and a commonly cited operating speed of 108 kHz. This development paved the way for the personal computer.

As Large-Scale Integration (LSI) became more prevalent, ICs could house thousands of transistors, allowing for more complex circuits. Pocket calculators, digital watches, and the first personal computers emerged during this time, each powered by IC technology. The 1970s laid the groundwork for the consumer electronics industry and marked the turning point at which ICs moved from specialized uses to everyday applications.

The 1980s: Very Large-Scale Integration (VLSI) and the Personal Computer Boom

In the 1980s, IC technology advanced to Very Large-Scale Integration (VLSI), enabling chips to hold hundreds of thousands of transistors. This leap in transistor density allowed for the creation of more powerful and efficient processors, memory chips, and specialized ICs, revolutionizing the personal computer industry.

VLSI made possible the first IBM PCs and Macintosh computers, sparking a surge in consumer demand for personal computing. The increasing power of ICs led to advances in graphics processing, data storage, and networking capabilities, making personal computers more accessible and capable than ever before. During this period, companies like Intel, AMD, and Motorola became leading innovators, pushing the limits of IC technology and reshaping industries worldwide.

The 1990s: Portable Electronics and Digital Revolution

As the 1990s arrived, ICs continued to shrink in size and cost, while their capabilities grew exponentially. Ultra Large-Scale Integration (ULSI) emerged, allowing millions of transistors to be integrated onto a single chip. This era saw the rise of mobile phones, digital cameras, gaming consoles, and other portable devices, each made possible by advancements in ICs.

CMOS (Complementary Metal-Oxide-Semiconductor) technology became the standard for IC fabrication, providing power efficiency that was essential for portable electronics. The Internet also gained momentum in the 1990s, with ICs powering servers, routers, and modems that connected the world digitally. The combination of powerful ICs and global connectivity set the stage for the digital transformation of industries and daily life.

The 2000s: Multi-Core Processors and System-on-Chip (SoC) Designs

The 2000s introduced multi-core processors, which allowed multiple processing units on a single IC, enhancing performance for multitasking and demanding applications. System-on-chip (SoC) designs emerged, integrating CPUs, GPUs, memory, and peripherals into a single chip. SoCs became essential for smartphones, tablets, and embedded systems, as they combined multiple functions while reducing power consumption and saving space.

Moore’s Law, the observation that the number of transistors on an IC doubles approximately every two years, was still largely valid during this time, driving the continuous miniaturization and performance gains in ICs. The semiconductor industry also invested heavily in research and development, resulting in faster, more reliable, and energy-efficient chips.
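The doubling rule described above can be written as a simple formula: the projected count equals a starting count multiplied by 2 raised to the number of elapsed doubling periods. The following Python sketch is only an idealized illustration of that arithmetic. It takes the Intel 4004 figure quoted earlier in this article (2,300 transistors in 1971) as its starting point, and the function name and parameters are hypothetical choices made for this example. The results are projections of the rule itself, not measurements of real chips.

```python
def projected_transistors(base_count, base_year, target_year,
                          doubling_period_years=2.0):
    """Project a transistor count under an idealized Moore's Law.

    Assumes the count doubles exactly once per doubling period,
    which real products only follow approximately.
    """
    elapsed_years = target_year - base_year
    doublings = elapsed_years / doubling_period_years
    return base_count * 2 ** doublings


if __name__ == "__main__":
    # Starting point: the 4004 figure cited in this article (assumption
    # for illustration; any other baseline could be substituted).
    for year in (1971, 1981, 1991, 2001):
        count = projected_transistors(2300, 1971, year)
        print(f"{year}: about {count:,.0f} transistors")
```

Under these assumptions, ten years means five doublings, so the 1981 projection is 2,300 × 32 = 73,600 transistors, and the 1991 projection is 2,300 × 1,024 = 2,355,200. These idealized numbers land in the same broad ranges as the "hundreds of thousands" and "millions" described for the 1980s and 1990s above, which is why the rule served as a useful planning guide for so long.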

The 2010s: AI, IoT, and Advanced Fabrication

The 2010s witnessed explosive growth in artificial intelligence (AI), the Internet of Things (IoT), and cloud computing. These technologies demanded powerful ICs with specialized capabilities, such as graphics processing units (GPUs) for AI and application-specific integrated circuits (ASICs) for tasks like Bitcoin mining. Additionally, field-programmable gate arrays (FPGAs) offered reprogrammable hardware that could be customized for the diverse needs of IoT and AI applications.

3D IC fabrication also emerged, allowing chips to be stacked vertically, increasing transistor density without increasing surface area. This shift marked a new direction for Moore’s Law, allowing companies like TSMC, Samsung, and Intel to continue advancing IC capabilities even as traditional miniaturization reached physical limits.

Present Day and Future of Integrated Circuits

Today, ICs are more advanced than ever, with 5-nanometer technology now in production and 3-nanometer on the horizon. These ultra-small transistors enable cutting-edge devices such as the latest smartphones, autonomous vehicles, and medical devices. Quantum computing and neuromorphic chips are on the rise, promising to change the landscape of computing by offering new architectures that go beyond classical IC designs.

The future of ICs likely includes further development in AI-enhanced circuits, edge computing, and quantum-compatible ICs, opening possibilities we’re only beginning to understand. With every step forward, ICs will continue to serve as the foundation for technological innovation.

Conclusion

The evolution of integrated circuits from simple transistor-based circuits to powerful multi-functional chips is a testament to human ingenuity and the relentless pursuit of progress. Integrated circuits have gone from solving basic computational problems to supporting global connectivity, automation, and artificial intelligence. As the industry pushes the limits of technology, the role of ICs will only grow more influential, driving future innovations that will continue to shape the digital world.

In short, integrated circuits are the unsung heroes of the digital age—tiny yet powerful devices that have transformed technology and reshaped our lives.